Compare commits

180 Commits

| Author | SHA1 | Date |

|---|---|---|

|

|

f855572ebf | |

|

|

cc9bbddfff | |

|

|

45bf6f6b65 | |

|

|

3e99dad408 | |

|

|

54d05e1330 | |

|

|

178168cc68 | |

|

|

c646c01789 | |

|

|

ad73a01a03 | |

|

|

22d6a9b137 | |

|

|

527b52cae2 | |

|

|

868a8fff99 | |

|

|

079cef0c2a | |

|

|

a677ccb018 | |

|

|

61397d616d | |

|

|

6940eae650 | |

|

|

ab08e54e37 | |

|

|

8757956636 | |

|

|

affdaa8fab | |

|

|

3443331501 | |

|

|

4353c84837 | |

|

|

b01382f267 | |

|

|

9d6a2be49e | |

|

|

39aada312c | |

|

|

87d59648cf | |

|

|

24d23ad230 | |

|

|

1c2bd2545c | |

|

|

bd3bff1f0d | |

|

|

01c50f4644 | |

|

|

99578f8577 | |

|

|

c2b4c38e2f | |

|

|

c6b29c2fab | |

|

|

070402eb1e | |

|

|

56f9aac92a | |

|

|

04f6881131 | |

|

|

2175231bc1 | |

|

|

669ceee48a | |

|

|

1704406cdf | |

|

|

fa65929c97 | |

|

|

8bc87a4b74 | |

|

|

4c210b9e52 | |

|

|

2a8bb7cf28 | |

|

|

52dc84cd13 | |

|

|

df80d2708b | |

|

|

4c04188ade | |

|

|

1271df5ca7 | |

|

|

577d914e93 | |

|

|

63f3901eaf | |

|

|

99f3220048 | |

|

|

d4b45a7a99 | |

|

|

ef71146e87 | |

|

|

cc1ff1c989 | |

|

|

5b68d7865e | |

|

|

d7c1cbf8ae | |

|

|

7b59e623ff | |

|

|

d1b5bd2958 | |

|

|

68c8ea0946 | |

|

|

49e63f753f | |

|

|

e734c51fe0 | |

|

|

b83ac15e68 | |

|

|

46c379bdaf | |

|

|

23d2c37486 | |

|

|

0ba450dcfe | |

|

|

8bb5fc9b23 | |

|

|

267c48962e | |

|

|

5d34eda4b9 | |

|

|

8424219bfc | |

|

|

de88ab6459 | |

|

|

2094e521e2 | |

|

|

bfac933203 | |

|

|

399139e6b4 | |

|

|

3051861a27 | |

|

|

36f2769d5e | |

|

|

a0eebfa6b6 | |

|

|

5ad725569d | |

|

|

8c93b88718 | |

|

|

df44ae75a5 | |

|

|

86595984a0 | |

|

|

906332e61d | |

|

|

72e9851922 | |

|

|

732e3381ba | |

|

|

c8ce5847d4 | |

|

|

bde9f7adec | |

|

|

43710c6324 | |

|

|

71f71d554c | |

|

|

1f6ec20685 | |

|

|

92b788862a | |

|

|

a515b9c1fd | |

|

|

8c9673a067 | |

|

|

4c84257fc1 | |

|

|

6c41ae40ac | |

|

|

f3782f08ad | |

|

|

e835902471 | |

|

|

786a21c61d | |

|

|

5e887ed479 | |

|

|

87987f72d1 | |

|

|

58529d659b | |

|

|

725553e501 | |

|

|

5034c3e82b | |

|

|

268c426349 | |

|

|

3e297d9643 | |

|

|

4228525dc3 | |

|

|

5e81860090 | |

|

|

f11a1105c9 | |

|

|

b1e4369f5d | |

|

|

779533a234 | |

|

|

c6f65211bd | |

|

|

6357080255 | |

|

|

9b8c8d9081 | |

|

|

bf19aee67d | |

|

|

4fe575fa85 | |

|

|

2a0dd80035 | |

|

|

b81f97e737 | |

|

|

0b3be0efca | |

|

|

7e01277dc2 | |

|

|

881fb6cba1 | |

|

|

1522931f6f | |

|

|

d14fb608d3 | |

|

|

abb37f17fd | |

|

|

cf770892f1 | |

|

|

1c2fb8db18 | |

|

|

64b94bfea5 | |

|

|

349e46739c | |

|

|

7f1c29df2d | |

|

|

318b7ebadc | |

|

|

8aba2a5612 | |

|

|

6bd2d0cf0e | |

|

|

4f8f3213c4 | |

|

|

3b237a0339 | |

|

|

ba05436fec | |

|

|

7a35d31c4b | |

|

|

df79746c71 | |

|

|

3e6284b04d | |

|

|

1964a0e488 | |

|

|

f9b263a718 | |

|

|

3640e4e70a | |

|

|

89f76b7f58 | |

|

|

837e934476 | |

|

|

7cbd77edc5 | |

|

|

36fd27c83c | |

|

|

06335058f3 | |

|

|

4448220085 | |

|

|

95e906a196 | |

|

|

4e2709468b | |

|

|

34277a3c67 | |

|

|

b5a442c042 | |

|

|

16752df99c | |

|

|

a2e9279189 | |

|

|

51ae29e851 | |

|

|

2a185a4119 | |

|

|

e70be5f158 | |

|

|

a38c65f265 | |

|

|

088e2a459f | |

|

|

42b786b7f3 | |

|

|

31056e8250 | |

|

|

ef34756046 | |

|

|

fd353d57cc | |

|

|

de857c6543 | |

|

|

c10ad67e43 | |

|

|

c9e1c22ee1 | |

|

|

5d3120e554 | |

|

|

41c0c3e3a0 | |

|

|

0ec5795f82 | |

|

|

12db8e001a | |

|

|

a6313ca406 | |

|

|

8bb1674bef | |

|

|

f81176b3dc | |

|

|

48b4da80e5 | |

|

|

0e4e979494 | |

|

|

5901071697 | |

|

|

ad71293f0a | |

|

|

5035a598ff | |

|

|

c5bbd11414 | |

|

|

eea35ce204 | |

|

|

3b1a2e67a7 | |

|

|

4af7c6a365 | |

|

|

615a36257b | |

|

|

be087c01cd | |

|

|

f31c40353c | |

|

|

15c826d03e | |

|

|

26da00f1a2 |

7

.flake8

|

|

@ -1,7 +0,0 @@

|

|||

[flake8]

|

||||

extend-ignore = E203, E266, E501

|

||||

# line length is intentionally set to 80 here because black uses Bugbear

|

||||

# See https://github.com/psf/black/blob/master/docs/the_black_code_style.md#line-length for more details

|

||||

max-line-length = 80

|

||||

max-complexity = 18

|

||||

select = B,C,E,F,W,T4,B9

|

||||

|

|

@ -0,0 +1,13 @@

|

|||

# These are supported funding model platforms

|

||||

|

||||

github: [nathom]

|

||||

patreon: # Replace with a single Patreon username

|

||||

open_collective: # Replace with a single Open Collective username

|

||||

ko_fi: # Replace with a single Ko-fi username

|

||||

tidelift: # Replace with a single Tidelift platform-name/package-name e.g., npm/babel

|

||||

community_bridge: # Replace with a single Community Bridge project-name e.g., cloud-foundry

|

||||

liberapay: # Replace with a single Liberapay username

|

||||

issuehunt: # Replace with a single IssueHunt username

|

||||

otechie: # Replace with a single Otechie username

|

||||

lfx_crowdfunding: # Replace with a single LFX Crowdfunding project-name e.g., cloud-foundry

|

||||

custom: # Replace with up to 4 custom sponsorship URLs e.g., ['link1', 'link2']

|

||||

|

|

@ -36,9 +36,9 @@ body:

|

|||

attributes:

|

||||

label: Debug Traceback

|

||||

description: |

|

||||

Run your command, with `-vvv` appended to it, and paste the output here.

|

||||

Run your command with the `-vvv` option and paste the output here.

|

||||

For example, if the problematic command was `rip url https://example.com`, then

|

||||

you would run `rip url https://example.com -vvv` to get the debug logs.

|

||||

you would run `rip -vvv url https://example.com` to get the debug logs.

|

||||

Make sure to check the logs for any personal information such as emails and remove them.

|

||||

render: "text"

|

||||

placeholder: Logs printed to terminal screen

|

||||

|

|

@ -49,7 +49,7 @@ body:

|

|||

attributes:

|

||||

label: Config File

|

||||

description: |

|

||||

Find the config file using `rip config --open` and paste the contents here.

|

||||

Find the config file using `rip config open` and paste the contents here.

|

||||

Make sure you REMOVE YOUR CREDENTIALS!

|

||||

render: toml

|

||||

placeholder: Contents of config.toml

|

||||

|

|

|

|||

|

|

@ -1,17 +1,2 @@

|

|||

# Number of days of inactivity before an issue becomes stale

|

||||

daysUntilStale: 60

|

||||

# Number of days of inactivity before a stale issue is closed

|

||||

daysUntilClose: 7

|

||||

# Issues with these labels will never be considered stale

|

||||

exemptLabels:

|

||||

- pinned

|

||||

- security

|

||||

# Label to use when marking an issue as stale

|

||||

staleLabel: stale

|

||||

# Comment to post when marking an issue as stale. Set to `false` to disable

|

||||

markComment: >

|

||||

This issue has been automatically marked as stale because it has not had

|

||||

recent activity. It will be closed if no further activity occurs. Thank you

|

||||

for your contributions.

|

||||

# Comment to post when closing a stale issue. Set to `false` to disable

|

||||

closeComment: false

|

||||

- name: Close Stale Issues

|

||||

uses: actions/stale@v9.0.0

|

||||

|

|

|

|||

|

|

@ -18,6 +18,6 @@ jobs:

|

|||

steps:

|

||||

- uses: actions/checkout@v2

|

||||

- name: Build and publish to pypi

|

||||

uses: JRubics/poetry-publish@v1.6

|

||||

uses: JRubics/poetry-publish@v1.17

|

||||

with:

|

||||

pypi_token: ${{ secrets.PYPI_TOKEN }}

|

||||

|

|

|

|||

|

|

@ -0,0 +1,41 @@

|

|||

name: Python Poetry Test

|

||||

|

||||

on:

|

||||

push:

|

||||

branches:

|

||||

- main

|

||||

- dev

|

||||

pull_request:

|

||||

branches:

|

||||

- main

|

||||

- dev

|

||||

|

||||

jobs:

|

||||

test:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@v3

|

||||

name: Check out repository code

|

||||

|

||||

- name: Set up Python

|

||||

uses: actions/setup-python@v4

|

||||

with:

|

||||

python-version: '3.10' # Specify the Python version

|

||||

|

||||

- name: Install and configure Poetry

|

||||

uses: snok/install-poetry@v1

|

||||

with:

|

||||

version: 1.5.1

|

||||

virtualenvs-create: false

|

||||

virtualenvs-in-project: true

|

||||

installer-parallel: true

|

||||

|

||||

- name: Install dependencies

|

||||

run: poetry install

|

||||

|

||||

- name: Run tests

|

||||

run: poetry run pytest

|

||||

|

||||

- name: Success message

|

||||

if: success()

|

||||

run: echo "Tests passed successfully!"

|

||||

|

|

@ -0,0 +1,11 @@

|

|||

name: Ruff

|

||||

on: [push, pull_request]

|

||||

jobs:

|

||||

ruff:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- uses: chartboost/ruff-action@v1

|

||||

- uses: chartboost/ruff-action@v1

|

||||

with:

|

||||

args: 'format --check'

|

||||

|

|

@ -20,3 +20,4 @@ StreamripDownloads

|

|||

*test.py

|

||||

/.mypy_cache

|

||||

.DS_Store

|

||||

pyrightconfig.json

|

||||

|

|

|

|||

|

|

@ -1,3 +0,0 @@

|

|||

[settings]

|

||||

multi_line_output=3

|

||||

include_trailing_comma=True

|

||||

53

.mypy.ini

|

|

@ -1,53 +0,0 @@

|

|||

[mypy-mutagen.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-tqdm.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-pathvalidate.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-pick.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-simple_term_menu.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-setuptools.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-requests.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-tomlkit.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-Crypto.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-Cryptodome.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-click.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-PIL.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-cleo.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-deezer.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-appdirs.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-m3u8.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-aiohttp.*]

|

||||

ignore_missing_imports = True

|

||||

|

||||

[mypy-aiofiles.*]

|

||||

ignore_missing_imports = True

|

||||

78

README.md

|

|

@ -1,26 +1,27 @@

|

|||

# streamrip

|

||||

|

||||

|

||||

[](https://pepy.tech/project/streamrip)

|

||||

[](https://github.com/python/black)

|

||||

|

||||

A scriptable stream downloader for Qobuz, Tidal, Deezer and SoundCloud.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## Features

|

||||

|

||||

- Super fast, as it utilizes concurrent downloads and conversion

|

||||

- Fast, concurrent downloads powered by `aiohttp`

|

||||

- Downloads tracks, albums, playlists, discographies, and labels from Qobuz, Tidal, Deezer, and SoundCloud

|

||||

- Supports downloads of Spotify and Apple Music playlists through [last.fm](https://www.last.fm)

|

||||

- Automatically converts files to a preferred format

|

||||

- Has a database that stores the downloaded tracks' IDs so that repeats are avoided

|

||||

- Easy to customize with the config file

|

||||

- Concurrency and rate limiting

|

||||

- Interactive search for all sources

|

||||

- Highly customizable through the config file

|

||||

- Integration with `youtube-dl`

|

||||

|

||||

## Installation

|

||||

|

||||

First, ensure [Python](https://www.python.org/downloads/) (version 3.8 or greater) and [pip](https://pip.pypa.io/en/stable/installing/) are installed. If you are on Windows, install [Microsoft Visual C++ Tools](https://visualstudio.microsoft.com/visual-cpp-build-tools/). Then run the following in the command line:

|

||||

First, ensure [Python](https://www.python.org/downloads/) (version 3.10 or greater) and [pip](https://pip.pypa.io/en/stable/installing/) are installed. Then install `ffmpeg`. You may choose not to install this, but some functionality will be limited.

|

||||

|

||||

```bash

|

||||

pip3 install streamrip --upgrade

|

||||

|

|

@ -34,7 +35,16 @@ rip

|

|||

|

||||

it should show the main help page. If you have no idea what these mean, or are having other issues installing, check out the [detailed installation instructions](https://github.com/nathom/streamrip/wiki#detailed-installation-instructions).

|

||||

|

||||

If you would like to use `streamrip`'s conversion capabilities, download TIDAL videos, or download music from SoundCloud, install [ffmpeg](https://ffmpeg.org/download.html). To download music from YouTube, install [youtube-dl](https://github.com/ytdl-org/youtube-dl#installation).

|

||||

For Arch Linux users, an AUR package exists. Make sure to install required packages from the AUR before using `makepkg` or use an AUR helper to automatically resolve them.

|

||||

```

|

||||

git clone https://aur.archlinux.org/streamrip.git

|

||||

cd streamrip

|

||||

makepkg -si

|

||||

```

|

||||

or

|

||||

```

|

||||

paru -S streamrip

|

||||

```

|

||||

|

||||

### Streamrip beta

|

||||

|

||||

|

|

@ -61,17 +71,13 @@ Download multiple albums from Qobuz

|

|||

rip url https://www.qobuz.com/us-en/album/back-in-black-ac-dc/0886444889841 https://www.qobuz.com/us-en/album/blue-train-john-coltrane/0060253764852

|

||||

```

|

||||

|

||||

|

||||

|

||||

Download the album and convert it to `mp3`

|

||||

|

||||

```bash

|

||||

rip url --codec mp3 https://open.qobuz.com/album/0060253780968

|

||||

rip --codec mp3 url https://open.qobuz.com/album/0060253780968

|

||||

```

|

||||

|

||||

|

||||

|

||||

To set the maximum quality, use the `--max-quality` option to `0, 1, 2, 3, 4`:

|

||||

To set the maximum quality, use the `--quality` option to `0, 1, 2, 3, 4`:

|

||||

|

||||

| Quality ID | Audio Quality | Available Sources |

|

||||

| ---------- | --------------------- | -------------------------------------------- |

|

||||

|

|

@ -81,30 +87,24 @@ To set the maximum quality, use the `--max-quality` option to `0, 1, 2, 3, 4`:

|

|||

| 3 | 24 bit, ≤ 96 kHz | Tidal (MQA), Qobuz, SoundCloud (rarely) |

|

||||

| 4 | 24 bit, ≤ 192 kHz | Qobuz |

|

||||

|

||||

|

||||

|

||||

```bash

|

||||

rip url --max-quality 3 https://tidal.com/browse/album/147569387

|

||||

rip --quality 3 url https://tidal.com/browse/album/147569387

|

||||

```

|

||||

|

||||

Search for albums matching `lil uzi vert` on SoundCloud

|

||||

> Using `4` is generally a waste of space. It is impossible for humans to perceive the difference between sampling rates higher than 44.1 kHz. It may be useful if you're processing/slowing down the audio.

|

||||

|

||||

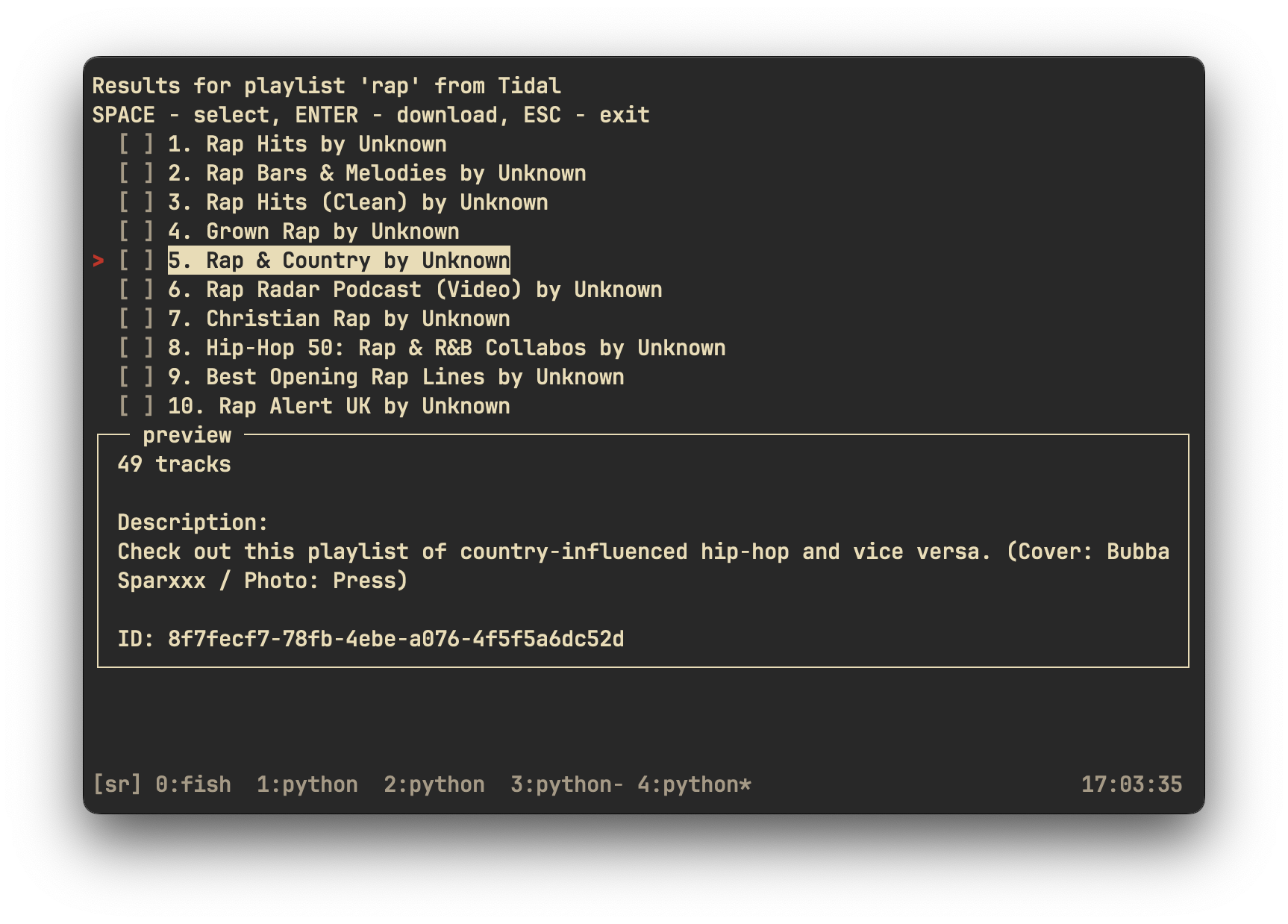

Search for playlists matching `rap` on Tidal

|

||||

|

||||

```bash

|

||||

rip search --source soundcloud 'lil uzi vert'

|

||||

rip search tidal playlist 'rap'

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

Search for *Rumours* on Tidal, and download it

|

||||

|

||||

```bash

|

||||

rip search 'fleetwood mac rumours'

|

||||

```

|

||||

|

||||

Want to find some new music? Use the `discover` command (only on Qobuz)

|

||||

|

||||

```bash

|

||||

rip discover --list 'best-sellers'

|

||||

rip search tidal album 'fleetwood mac rumours'

|

||||

```

|

||||

|

||||

Download a last.fm playlist using the lastfm command

|

||||

|

|

@ -113,18 +113,16 @@ Download a last.fm playlist using the lastfm command

|

|||

rip lastfm https://www.last.fm/user/nathan3895/playlists/12126195

|

||||

```

|

||||

|

||||

For extreme customization, see the config file

|

||||

For more customization, see the config file

|

||||

|

||||

```

|

||||

rip config --open

|

||||

rip config open

|

||||

```

|

||||

|

||||

|

||||

|

||||

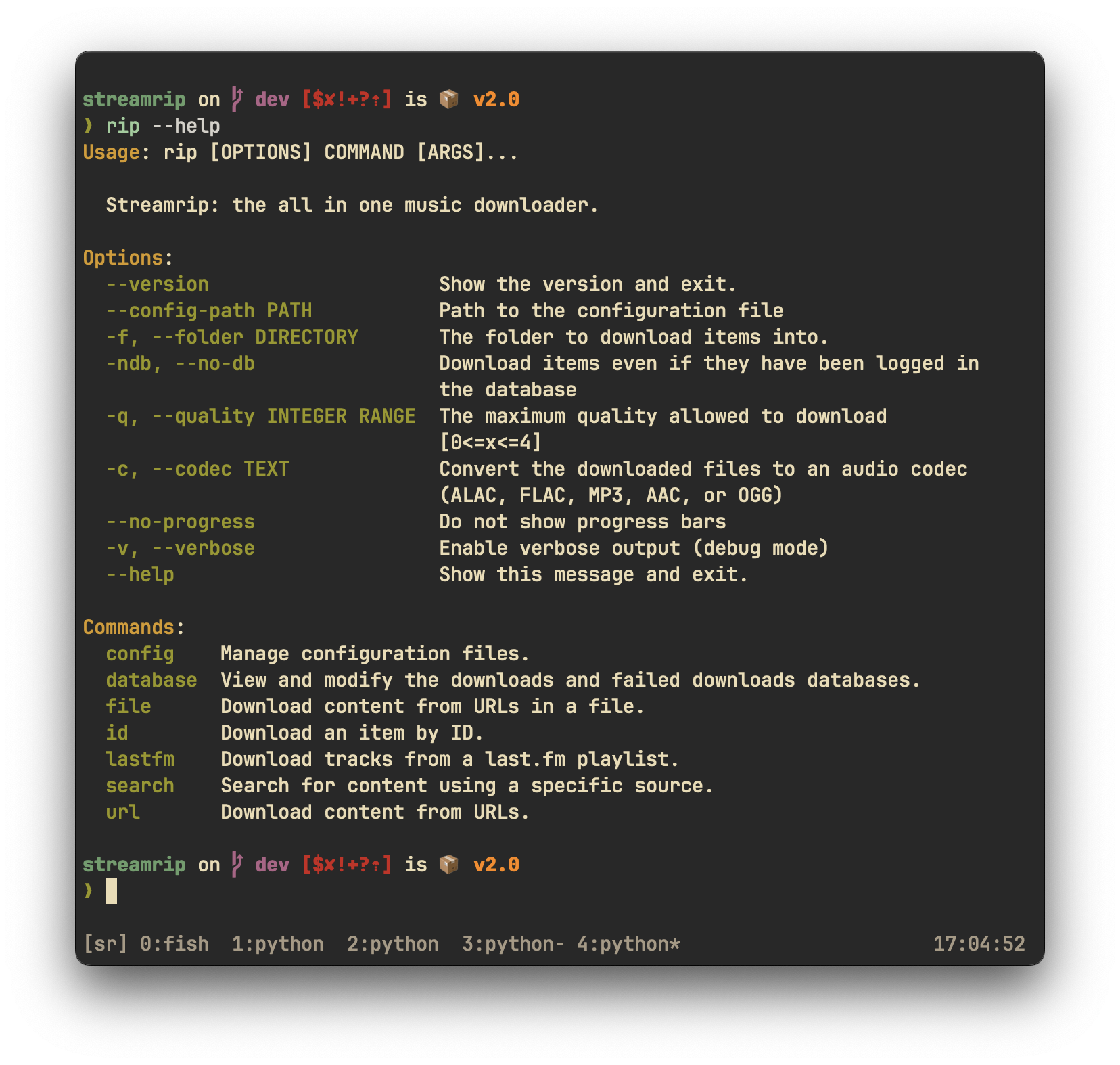

If you're confused about anything, see the help pages. The main help pages can be accessed by typing `rip` by itself in the command line. The help pages for each command can be accessed with the `-h` flag. For example, to see the help page for the `url` command, type

|

||||

If you're confused about anything, see the help pages. The main help pages can be accessed by typing `rip` by itself in the command line. The help pages for each command can be accessed with the `--help` flag. For example, to see the help page for the `url` command, type

|

||||

|

||||

```

|

||||

rip url -h

|

||||

rip url --help

|

||||

```

|

||||

|

||||

|

||||

|

|

@ -133,7 +131,6 @@ rip url -h

|

|||

|

||||

For more in-depth information about `streamrip`, see the help pages and the [wiki](https://github.com/nathom/streamrip/wiki/).

|

||||

|

||||

|

||||

## Contributions

|

||||

|

||||

All contributions are appreciated! You can help out the project by opening an issue

|

||||

|

|

@ -158,7 +155,7 @@ Please document any functions or obscure lines of code.

|

|||

|

||||

### The Wiki

|

||||

|

||||

To help out `streamrip` users that may be having trouble, consider contributing some information to the wiki.

|

||||

To help out `streamrip` users that may be having trouble, consider contributing some information to the wiki.

|

||||

Nothing is too obvious and everything is appreciated.

|

||||

|

||||

## Acknowledgements

|

||||

|

|

@ -172,17 +169,10 @@ Thanks to Vitiko98, Sorrow446, and DashLt for their contributions to this projec

|

|||

- [Tidal-Media-Downloader](https://github.com/yaronzz/Tidal-Media-Downloader)

|

||||

- [scdl](https://github.com/flyingrub/scdl)

|

||||

|

||||

|

||||

|

||||

## Disclaimer

|

||||

|

||||

I will not be responsible for how **you** use `streamrip`. By using `streamrip`, you agree to the terms and conditions of the Qobuz, Tidal, and Deezer APIs.

|

||||

|

||||

I will not be responsible for how you use `streamrip`. By using `streamrip`, you agree to the terms and conditions of the Qobuz, Tidal, and Deezer APIs.

|

||||

## Sponsorship

|

||||

|

||||

## Donations/Sponsorship

|

||||

|

||||

<a href="https://www.buymeacoffee.com/nathom" target="_blank"><img src="https://cdn.buymeacoffee.com/buttons/default-orange.png" alt="Buy Me A Coffee" height="41" width="174"></a>

|

||||

|

||||

|

||||

Consider contributing some funds [here](https://www.buymeacoffee.com/nathom), which will go towards holding

|

||||

the premium subscriptions that I need to debug and improve streamrip. Thanks for your support!

|

||||

Consider becoming a Github sponsor for me if you enjoy my open source software.

|

||||

|

|

|

|||

|

Before Width: | Height: | Size: 325 KiB |

|

Before Width: | Height: | Size: 148 KiB |

|

Before Width: | Height: | Size: 292 KiB After Width: | Height: | Size: 377 KiB |

|

Before Width: | Height: | Size: 407 KiB After Width: | Height: | Size: 641 KiB |

|

|

@ -0,0 +1,137 @@

|

|||

<?xml version="1.0" encoding="utf-8"?>

|

||||

<!-- Generator: Adobe Illustrator 27.3.1, SVG Export Plug-In . SVG Version: 6.00 Build 0) -->

|

||||

<svg version="1.1" id="Layer_1" xmlns="http://www.w3.org/2000/svg" xmlns:xlink="http://www.w3.org/1999/xlink" x="0px" y="0px"

|

||||

viewBox="0 0 768 256" style="enable-background:new 0 0 768 256;" xml:space="preserve">

|

||||

<style type="text/css">

|

||||

.st0{fill:#252525;}

|

||||

.st1{fill:#E9D7B0;}

|

||||

.st2{fill:#FFFFFF;}

|

||||

.st3{fill:url(#SVGID_1_);}

|

||||

.st4{fill:url(#SVGID_00000055683228248778521670000006510520551845247645_);}

|

||||

.st5{fill:url(#SVGID_00000158743434889325090190000000134816785925054387_);}

|

||||

.st6{fill:url(#SVGID_00000076587668925285995460000015083660019040157360_);}

|

||||

.st7{fill:url(#SVGID_00000065791897104437684560000004534853821863457943_);}

|

||||

.st8{fill:url(#SVGID_00000178914817404700868070000017136081737691720877_);}

|

||||

</style>

|

||||

<rect class="st0" width="768" height="256"/>

|

||||

<g id="_x23_e9d7b0ff">

|

||||

<path class="st1" d="M93.39,83.44c13.36-5.43,28.85-5.44,42.21,0c15.49,5.93,28.38,21.3,27.02,38.48

|

||||

c-0.74,14.18-11.01,26.26-23.41,32.34c-2.91,0.98-1.75,4.44-0.87,6.49c0.96,3.43,3.24,7.74,0.33,10.83

|

||||

c-3.16,3.12-8.93,2.28-11.58-1.18c-2.64,4.15-9.77,4.18-12.42,0.06c-3.06,3.86-9.98,4.28-12.78-0.09c-2.8,3.9-9.73,4.44-12.36,0.12

|

||||

c-2.18-5.21,3.39-10.37,1.64-15.39c-5.03-2.9-10.16-5.83-14.16-10.13c-8.48-8.48-12.72-21.27-9.79-33.04

|

||||

C70.22,98.67,81.06,88.37,93.39,83.44 M85.88,106c-0.12,9.66-0.05,19.32-0.04,28.99c-0.29,1.43,1.67,2.49,2.75,1.6

|

||||

c8.39-4.91,16.87-9.71,25.14-14.8c0.07-0.43,0.19-1.28,0.25-1.71c-0.71-1.02-1.95-1.44-2.94-2.11c-7.67-4.44-15.27-9-22.97-13.38

|

||||

l-1-0.02C86.77,104.93,86.18,105.64,85.88,106 M116.47,107.51c-0.11,0.35-0.33,1.03-0.45,1.37c4.97,8.7,9.87,17.49,15.13,26

|

||||

c0.47,0.04,1.39,0.11,1.86,0.15c5.31-8.16,9.87-16.87,14.85-25.26c1.17-1.23-0.24-3.31-1.8-2.9c-9.69,0.07-19.39-0.17-29.06,0.12

|

||||

L116.47,107.51 M108.66,152.02c3.93,0.09,7.87,0.05,11.8,0.02l0.28-0.79c-1.94-3.36-3.83-6.75-5.87-10.05

|

||||

C112.38,144.46,110.11,148.18,108.66,152.02z"/>

|

||||

<path class="st1" d="M576.8,106.02c7.41-0.05,14.82,0,22.23-0.03c5.88,0.13,12.29,2.07,15.81,7.11c5.54,8.26,2.67,21.48-7.11,25.1

|

||||

c4.12,7.54,8.16,15.13,12.08,22.78c-4.18,0.02-8.36,0.07-12.52-0.03c-3.44-6.64-6.72-13.35-10.26-19.94

|

||||

c-2.95-0.02-5.89-0.04-8.82,0.02c-0.01,6.65,0.01,13.3-0.01,19.96c-3.8,0.02-7.6,0.02-11.4-0.01

|

||||

C576.78,142.66,576.78,124.34,576.8,106.02 M588.21,115.03c0,5.65-0.01,11.3,0,16.95c4.55-0.31,9.38,0.89,13.68-0.96

|

||||

c5.29-2.47,5.64-10.71,1.37-14.29C598.83,113.73,593.22,115.42,588.21,115.03z"/>

|

||||

<path class="st1" d="M634.46,106.09c3.8-0.2,7.62-0.06,11.44-0.07c0.01,18.32,0.01,36.64,0,54.96c-3.72,0.04-7.43,0.01-11.13,0.02

|

||||

l-0.31-0.36C634.52,142.46,634.51,124.27,634.46,106.09z"/>

|

||||

<path class="st1" d="M663.8,106.01c8.13-0.03,16.26,0,24.39-0.01c4.97,0.61,10.12,2.42,13.55,6.32c6.42,7.2,5.48,20.24-3.06,25.4

|

||||

c-7.01,4.5-15.6,3.01-23.47,3.3c-0.01,6.66,0.01,13.31-0.01,19.97c-3.8,0.02-7.6,0.02-11.4,0

|

||||

C663.78,142.66,663.78,124.34,663.8,106.01 M675.21,115.02c-0.01,5.65,0,11.3,0,16.95c4.89-0.21,9.96,0.7,14.67-0.71

|

||||

c6.17-2.5,5.84-12.44,0.04-15.21C685.29,114.05,680.1,115.36,675.21,115.02z"/>

|

||||

</g>

|

||||

<g>

|

||||

<g>

|

||||

<path class="st2" d="M222.37,146.41c-0.37-7.72-6.82-9.57-14.47-11.47c-14.79-1.47-26.9-14.31-13.76-26.02

|

||||

c10.72-8.47,34.44-4.24,33.98,12.08h-6.05c0.06-18.2-38.39-11.92-24.28,4.72c9.12,5.92,31.39,4.84,30.63,20.39

|

||||

c-0.31,21.37-41.72,19.73-41.49-1.11h6.05C193.13,159.27,221.37,160.43,222.37,146.41z"/>

|

||||

<path class="st2" d="M262,91v14h12v5h-12v36.83c-0.4,8.19,4.63,10.28,11.94,8.58l0.25,4.96c-9.68,3.27-19.28-1.68-18.21-13.53

|

||||

c0,0,0-36.84,0-36.84H246v-5h10V91H262z"/>

|

||||

<path class="st2" d="M322.64,110.18c-28.51-4.75-17.52,34.44-19.54,49.82H297v-55h6l0.1,8.74c3.48-7.59,11.86-11.46,19.69-9.1

|

||||

L322.64,110.18z"/>

|

||||

<path class="st2" d="M363.41,161.08c-21.91,0.67-29.94-26.47-21.56-43.37c13.58-25.28,45.68-13.11,42.17,15.29h-39.2

|

||||

c-2.08,19.59,21.82,31.69,34.23,15.49l3.81,2.89C378.4,157.85,371.91,161.08,363.41,161.08z M362.3,109.46

|

||||

c-10.12,0.02-16.16,8.52-17.27,18.54h32.96C378.08,118.24,372.38,109.36,362.3,109.46z"/>

|

||||

<path class="st2" d="M441.23,160c-0.61-1.74-1.01-4.31-1.17-7.71c-9.1,13.08-36.59,11.79-36.58-6.95

|

||||

c0.24-17.92,22.23-17.99,36.53-17.47v-6.28c1.4-16.62-28.25-16.84-29.07-1.58l-6.13-0.05c0.21-15.94,25.54-20.87,35.78-11.62

|

||||

c11.18,9.79,1.91,39.18,7.18,51.67H441.23z M422.12,155.83c7.6,0.09,14.88-3.96,17.88-10.82c0,0,0-12.09,0-12.09

|

||||

c-7.25-0.03-19.87-0.62-25.09,3.33C404.85,142.2,410.94,156.83,422.12,155.83z"/>

|

||||

<path class="st2" d="M478.75,105l0.2,8.95c6.5-12.93,29.79-13.88,33.75,0.71c3.8-6.84,10.76-10.71,18.45-10.67

|

||||

c26.44-1.62,15.64,39.94,17.75,56.02H544v-36.21c-0.15-9.53-3.53-14.45-13.15-14.47c-8.1-0.07-15.28,6.23-15.85,14.32

|

||||

c0,0,0,36.36,0,36.36h-7v-36.66c-0.09-9.21-4.06-14-13.25-14.02c-8.11,0.03-13.36,4.99-15.75,13c0,0,0,37.68,0,37.68h-6v-55

|

||||

H478.75z"/>

|

||||

</g>

|

||||

<g>

|

||||

<linearGradient id="SVGID_1_" gradientUnits="userSpaceOnUse" x1="186.9279" y1="133.0317" x2="550.2825" y2="133.0317">

|

||||

<stop offset="0" style="stop-color:#FCAA62"/>

|

||||

<stop offset="0.148" style="stop-color:#EF9589"/>

|

||||

<stop offset="0.2709" style="stop-color:#E588A4"/>

|

||||

<stop offset="0.4218" style="stop-color:#D17AC7"/>

|

||||

<stop offset="0.5335" style="stop-color:#AC83D0"/>

|

||||

<stop offset="0.8547" style="stop-color:#4DBFC8"/>

|

||||

</linearGradient>

|

||||

<path class="st3" d="M222.37,146.41c-0.37-7.72-6.82-9.57-14.47-11.47c-14.79-1.47-26.9-14.31-13.76-26.02

|

||||

c10.72-8.47,34.44-4.24,33.98,12.08h-6.05c0.06-18.2-38.39-11.92-24.28,4.72c9.12,5.92,31.39,4.84,30.63,20.39

|

||||

c-0.31,21.37-41.72,19.73-41.49-1.11h6.05C193.13,159.27,221.37,160.43,222.37,146.41z"/>

|

||||

|

||||

<linearGradient id="SVGID_00000103946862931371191510000000729465072844906368_" gradientUnits="userSpaceOnUse" x1="191.9648" y1="126.1764" x2="544.4528" y2="126.1764">

|

||||

<stop offset="0" style="stop-color:#FCAA62"/>

|

||||

<stop offset="0.148" style="stop-color:#EF9589"/>

|

||||

<stop offset="0.2709" style="stop-color:#E588A4"/>

|

||||

<stop offset="0.4218" style="stop-color:#D17AC7"/>

|

||||

<stop offset="0.5335" style="stop-color:#AC83D0"/>

|

||||

<stop offset="0.8547" style="stop-color:#4DBFC8"/>

|

||||

</linearGradient>

|

||||

<path style="fill:url(#SVGID_00000103946862931371191510000000729465072844906368_);" d="M262,91v14h12v5h-12v36.83

|

||||

c-0.4,8.19,4.63,10.28,11.94,8.58l0.25,4.96c-9.68,3.27-19.28-1.68-18.21-13.53c0,0,0-36.84,0-36.84H246v-5h10V91H262z"/>

|

||||

|

||||

<linearGradient id="SVGID_00000176721575697468656650000009150589237067441335_" gradientUnits="userSpaceOnUse" x1="190.9367" y1="131.9768" x2="549.0467" y2="131.9768">

|

||||

<stop offset="0" style="stop-color:#FCAA62"/>

|

||||

<stop offset="0.148" style="stop-color:#EF9589"/>

|

||||

<stop offset="0.2709" style="stop-color:#E588A4"/>

|

||||

<stop offset="0.4218" style="stop-color:#D17AC7"/>

|

||||

<stop offset="0.5335" style="stop-color:#AC83D0"/>

|

||||

<stop offset="0.8547" style="stop-color:#4DBFC8"/>

|

||||

</linearGradient>

|

||||

<path style="fill:url(#SVGID_00000176721575697468656650000009150589237067441335_);" d="M322.64,110.18

|

||||

c-28.51-4.75-17.52,34.44-19.54,49.82H297v-55h6l0.1,8.74c3.48-7.59,11.86-11.46,19.69-9.1L322.64,110.18z"/>

|

||||

|

||||

<linearGradient id="SVGID_00000079455930380207591750000003505925285802537364_" gradientUnits="userSpaceOnUse" x1="196.2564" y1="132.5227" x2="548.9808" y2="132.5227">

|

||||

<stop offset="0" style="stop-color:#FCAA62"/>

|

||||

<stop offset="0.148" style="stop-color:#EF9589"/>

|

||||

<stop offset="0.2709" style="stop-color:#E588A4"/>

|

||||

<stop offset="0.4218" style="stop-color:#D17AC7"/>

|

||||

<stop offset="0.5335" style="stop-color:#AC83D0"/>

|

||||

<stop offset="0.8547" style="stop-color:#4DBFC8"/>

|

||||

</linearGradient>

|

||||

<path style="fill:url(#SVGID_00000079455930380207591750000003505925285802537364_);" d="M363.41,161.08

|

||||

c-21.91,0.67-29.94-26.47-21.56-43.37c13.58-25.28,45.68-13.11,42.17,15.29h-39.2c-2.08,19.59,21.82,31.69,34.23,15.49l3.81,2.89

|

||||

C378.4,157.85,371.91,161.08,363.41,161.08z M362.3,109.46c-10.12,0.02-16.16,8.52-17.27,18.54h32.96

|

||||

C378.08,118.24,372.38,109.36,362.3,109.46z"/>

|

||||

|

||||

<linearGradient id="SVGID_00000027569275093958031240000016844973093868673714_" gradientUnits="userSpaceOnUse" x1="190.8339" y1="132.3138" x2="549.086" y2="132.3138">

|

||||

<stop offset="0" style="stop-color:#FCAA62"/>

|

||||

<stop offset="0.148" style="stop-color:#EF9589"/>

|

||||

<stop offset="0.2709" style="stop-color:#E588A4"/>

|

||||

<stop offset="0.4218" style="stop-color:#D17AC7"/>

|

||||

<stop offset="0.5335" style="stop-color:#AC83D0"/>

|

||||

<stop offset="0.8547" style="stop-color:#4DBFC8"/>

|

||||

</linearGradient>

|

||||

<path style="fill:url(#SVGID_00000027569275093958031240000016844973093868673714_);" d="M441.23,160

|

||||

c-0.61-1.74-1.01-4.31-1.17-7.71c-9.1,13.08-36.59,11.79-36.58-6.95c0.24-17.92,22.23-17.99,36.53-17.47v-6.28

|

||||

c1.4-16.62-28.25-16.84-29.07-1.58l-6.13-0.05c0.21-15.94,25.54-20.87,35.78-11.62c11.18,9.79,1.91,39.18,7.18,51.67H441.23z

|

||||

M422.12,155.83c7.6,0.09,14.88-3.96,17.88-10.82c0,0,0-12.09,0-12.09c-7.25-0.03-19.87-0.62-25.09,3.33

|

||||

C404.85,142.2,410.94,156.83,422.12,155.83z"/>

|

||||

|

||||

<linearGradient id="SVGID_00000170236692072998314540000006170404067406300812_" gradientUnits="userSpaceOnUse" x1="197.2366" y1="131.9687" x2="549.7985" y2="131.9687">

|

||||

<stop offset="0" style="stop-color:#FCAA62"/>

|

||||

<stop offset="0.148" style="stop-color:#EF9589"/>

|

||||

<stop offset="0.2709" style="stop-color:#E588A4"/>

|

||||

<stop offset="0.4218" style="stop-color:#D17AC7"/>

|

||||

<stop offset="0.5335" style="stop-color:#AC83D0"/>

|

||||

<stop offset="0.8547" style="stop-color:#4DBFC8"/>

|

||||

</linearGradient>

|

||||

<path style="fill:url(#SVGID_00000170236692072998314540000006170404067406300812_);" d="M478.75,105l0.2,8.95

|

||||

c6.5-12.93,29.79-13.88,33.75,0.71c3.8-6.84,10.76-10.71,18.45-10.67c26.44-1.62,15.64,39.94,17.75,56.02H544v-36.21

|

||||

c-0.15-9.53-3.53-14.45-13.15-14.47c-8.1-0.07-15.28,6.23-15.85,14.32c0,0,0,36.36,0,36.36h-7v-36.66

|

||||

c-0.09-9.21-4.06-14-13.25-14.02c-8.11,0.03-13.36,4.99-15.75,13c0,0,0,37.68,0,37.68h-6v-55H478.75z"/>

|

||||

</g>

|

||||

</g>

|

||||

</svg>

|

||||

|

After Width: | Height: | Size: 9.9 KiB |

|

After Width: | Height: | Size: 475 KiB |

|

|

@ -1,62 +1,91 @@

|

|||

[tool.poetry]

|

||||

name = "streamrip"

|

||||

version = "1.9.5"

|

||||

version = "2.0.5"

|

||||

description = "A fast, all-in-one music ripper for Qobuz, Deezer, Tidal, and SoundCloud"

|

||||

authors = ["nathom <nathanthomas707@gmail.com>"]

|

||||

license = "GPL-3.0-only"

|

||||

readme = "README.md"

|

||||

homepage = "https://github.com/nathom/streamrip"

|

||||

repository = "https://github.com/nathom/streamrip"

|

||||

include = ["streamrip/config.toml"]

|

||||

packages = [

|

||||

{ include = "streamrip" },

|

||||

{ include = "rip" },

|

||||

]

|

||||

include = ["src/config.toml"]

|

||||

keywords = ["hi-res", "free", "music", "download"]

|

||||

classifiers = [

|

||||

"License :: OSI Approved :: GNU General Public License (GPL)",

|

||||

"Operating System :: OS Independent",

|

||||

"License :: OSI Approved :: GNU General Public License (GPL)",

|

||||

"Operating System :: OS Independent",

|

||||

]

|

||||

packages = [{ include = "streamrip" }]

|

||||

|

||||

[tool.poetry.scripts]

|

||||

rip = "rip.cli:main"

|

||||

rip = "streamrip.rip:rip"

|

||||

|

||||

[tool.poetry.dependencies]

|

||||

python = ">=3.8 <4.0"

|

||||

requests = "^2.25.1"

|

||||

python = ">=3.10 <4.0"

|

||||

mutagen = "^1.45.1"

|

||||

click = "^8.0.1"

|

||||

tqdm = "^4.61.1"

|

||||

tomlkit = "^0.7.2"

|

||||

pathvalidate = "^2.4.1"

|

||||

simple-term-menu = {version = "^1.2.1", platform = 'darwin|linux'}

|

||||

pick = {version = "^1.0.0", platform = 'win32 or cygwin'}

|

||||

windows-curses = {version = "^2.2.0", platform = 'win32|cygwin'}

|

||||

Pillow = "^9.0.0"

|

||||

simple-term-menu = { version = "^1.2.1", platform = 'darwin|linux' }

|

||||

pick = { version = "^2", platform = 'win32|cygwin' }

|

||||

windows-curses = { version = "^2.2.0", platform = 'win32|cygwin' }

|

||||

Pillow = ">=9,<11"

|

||||

deezer-py = "1.3.6"

|

||||

pycryptodomex = "^3.10.1"

|

||||

cleo = {version = "1.0.0a4", allow-prereleases = true}

|

||||

appdirs = "^1.4.4"

|

||||

m3u8 = "^0.9.0"

|

||||

aiofiles = "^0.7.0"

|

||||

aiohttp = "^3.7.4"

|

||||

cchardet = "^2.1.7"

|

||||

aiofiles = "^0.7"

|

||||

aiohttp = "^3.9"

|

||||

aiodns = "^3.0.0"

|

||||

aiolimiter = "^1.1.0"

|

||||

pytest-mock = "^3.11.1"

|

||||

pytest-asyncio = "^0.21.1"

|

||||

rich = "^13.6.0"

|

||||

click-help-colors = "^0.9.2"

|

||||

|

||||

[tool.poetry.urls]

|

||||

"Bug Reports" = "https://github.com/nathom/streamrip/issues"

|

||||

|

||||

[tool.poetry.dev-dependencies]

|

||||

Sphinx = "^4.1.1"

|

||||

autodoc = "^0.5.0"

|

||||

types-click = "^7.1.2"

|

||||

types-Pillow = "^8.3.1"

|

||||

black = "^21.7b0"

|

||||

ruff = "^0.1"

|

||||

black = "^24"

|

||||

isort = "^5.9.3"

|

||||

flake8 = "^3.9.2"

|

||||

setuptools = "^58.0.4"

|

||||

pytest = "^6.2.5"

|

||||

setuptools = "^67.4.0"

|

||||

pytest = "^7.4"

|

||||

|

||||

[tool.pytest.ini_options]

|

||||

minversion = "6.0"

|

||||

addopts = "-ra -q"

|

||||

testpaths = ["tests"]

|

||||

log_level = "DEBUG"

|

||||

asyncio_mode = 'auto'

|

||||

log_cli = true

|

||||

|

||||

[build-system]

|

||||

requires = ["poetry-core>=1.0.0"]

|

||||

build-backend = "poetry.core.masonry.api"

|

||||

|

||||

[tool.ruff.lint]

|

||||

# Enable Pyflakes (`F`) and a subset of the pycodestyle (`E`) codes by default.

|

||||

select = ["E4", "E7", "E9", "F", "I", "ASYNC", "N", "RUF", "ERA001"]

|

||||

ignore = []

|

||||

|

||||

# Allow fix for all enabled rules (when `--fix`) is provided.

|

||||

fixable = ["ALL"]

|

||||

unfixable = []

|

||||

|

||||

# Allow unused variables when underscore-prefixed.

|

||||

dummy-variable-rgx = "^(_+|(_+[a-zA-Z0-9_]*[a-zA-Z0-9]+?))$"

|

||||

|

||||

[tool.ruff.format]

|

||||

# Like Black, use double quotes for strings.

|

||||

quote-style = "double"

|

||||

|

||||

# Like Black, indent with spaces, rather than tabs.

|

||||

indent-style = "space"

|

||||

|

||||

# Like Black, respect magic trailing commas.

|

||||

skip-magic-trailing-comma = false

|

||||

|

||||

# Like Black, automatically detect the appropriate line ending.

|

||||

line-ending = "auto"

|

||||

|

|

|

|||

|

|

@ -1 +0,0 @@

|

|||

"""Rip: an easy to use command line utility for downloading audio streams."""

|

||||

|

|

@ -1,4 +0,0 @@

|

|||

"""Run the rip program."""

|

||||

from .cli import main

|

||||

|

||||

main()

|

||||

834

rip/cli.py

|

|

@ -1,834 +0,0 @@

|

|||

import concurrent.futures

|

||||

import logging

|

||||

import os

|

||||

import threading

|

||||

from typing import Optional

|

||||

|

||||

import requests

|

||||

from cleo.application import Application as BaseApplication

|

||||

from cleo.commands.command import Command

|

||||

from cleo.formatters.style import Style

|

||||

from cleo.helpers import argument, option

|

||||

from click import launch

|

||||

|

||||

from streamrip import __version__

|

||||

|

||||

from .config import Config

|

||||

from .core import RipCore

|

||||

|

||||

logging.basicConfig(level="WARNING")

|

||||

logger = logging.getLogger("streamrip")

|

||||

|

||||

outdated = False

|

||||

newest_version = __version__

|

||||

|

||||

|

||||

class DownloadCommand(Command):

|

||||

name = "url"

|

||||

description = "Download items using urls."

|

||||

|

||||

arguments = [

|

||||

argument(

|

||||

"urls",

|

||||

"One or more Qobuz, Tidal, Deezer, or SoundCloud urls",

|

||||

optional=True,

|

||||

multiple=True,

|

||||

)

|

||||

]

|

||||

options = [

|

||||

option(

|

||||

"file",

|

||||

"-f",

|

||||

"Path to a text file containing urls",

|

||||

flag=False,

|

||||

default="None",

|

||||

),

|

||||

option(

|

||||

"codec",

|

||||

"-c",

|

||||

"Convert the downloaded files to <cmd>ALAC</cmd>, <cmd>FLAC</cmd>, <cmd>MP3</cmd>, <cmd>AAC</cmd>, or <cmd>OGG</cmd>",

|

||||

flag=False,

|

||||

default="None",

|

||||

),

|

||||

option(

|

||||

"max-quality",

|

||||

"m",

|

||||

"The maximum quality to download. Can be <cmd>0</cmd>, <cmd>1</cmd>, <cmd>2</cmd>, <cmd>3 </cmd>or <cmd>4</cmd>",

|

||||

flag=False,

|

||||

default="None",

|

||||

),

|

||||

option(

|

||||

"ignore-db",

|

||||

"-i",

|

||||

description="Download items even if they have been logged in the database.",

|

||||

),

|

||||

option("config", description="Path to config file.", flag=False),

|

||||

option("directory", "-d", "Directory to download items into.", flag=False),

|

||||

]

|

||||

|

||||

help = (

|

||||

"\nDownload <title>Dreams</title> by <title>Fleetwood Mac</title>:\n"

|

||||

"$ <cmd>rip url https://www.deezer.com/us/track/67549262</cmd>\n\n"

|

||||

"Batch download urls from a text file named <path>urls.txt</path>:\n"

|

||||

"$ <cmd>rip url --file urls.txt</cmd>\n\n"

|

||||

"For more information on Quality IDs, see\n"

|

||||

"<url>https://github.com/nathom/streamrip/wiki/Quality-IDs</url>\n"

|

||||

)

|

||||

|

||||

def handle(self):

|

||||

global outdated

|

||||

global newest_version

|

||||

|

||||

# Use a thread so that it doesn't slow down startup

|

||||

update_check = threading.Thread(target=is_outdated, daemon=True)

|

||||

update_check.start()

|

||||

|

||||

path, codec, quality, no_db, directory, config = clean_options(

|

||||

self.option("file"),

|

||||

self.option("codec"),

|

||||

self.option("max-quality"),

|

||||

self.option("ignore-db"),

|

||||

self.option("directory"),

|

||||

self.option("config"),

|

||||

)

|

||||

|

||||

config = Config(config)

|

||||

|

||||

if directory is not None:

|

||||

config.session["downloads"]["folder"] = directory

|

||||

|

||||

if no_db:

|

||||

config.session["database"]["enabled"] = False

|

||||

|

||||

if quality is not None:

|

||||

for source in ("qobuz", "tidal", "deezer"):

|

||||

config.session[source]["quality"] = quality

|

||||

|

||||

core = RipCore(config)

|

||||

|

||||

urls = self.argument("urls")

|

||||

|

||||

if path is not None:

|

||||

if os.path.isfile(path):

|

||||

core.handle_txt(path)

|

||||

else:

|

||||

self.line(

|

||||

f"<error>File <comment>{path}</comment> does not exist.</error>"

|

||||

)

|

||||

return 1

|

||||

|

||||

if urls:

|

||||

core.handle_urls(";".join(urls))

|

||||

|

||||

if len(core) > 0:

|

||||

core.download()

|

||||

elif not urls and path is None:

|

||||

self.line("<error>Must pass arguments. See </><cmd>rip url -h</cmd>.")

|

||||

|

||||

update_check.join()

|

||||

if outdated:

|

||||

import re

|

||||

import subprocess

|

||||

|

||||

self.line(

|

||||

f"\n<info>A new version of streamrip <title>v{newest_version}</title>"

|

||||

" is available! Run <cmd>pip3 install streamrip --upgrade</cmd>"

|

||||

" to update.</info>\n"

|

||||

)

|

||||

|

||||

md_header = re.compile(r"#\s+(.+)")

|

||||

bullet_point = re.compile(r"-\s+(.+)")

|

||||

code = re.compile(r"`([^`]+)`")

|

||||

issue_reference = re.compile(r"(#\d+)")

|

||||

|

||||

release_notes = requests.get(

|

||||

"https://api.github.com/repos/nathom/streamrip/releases/latest"

|

||||

).json()["body"]

|

||||

|

||||

release_notes = md_header.sub(r"<header>\1</header>", release_notes)

|

||||

release_notes = bullet_point.sub(r"<options=bold>•</> \1", release_notes)

|

||||

release_notes = code.sub(r"<cmd>\1</cmd>", release_notes)

|

||||

release_notes = issue_reference.sub(r"<options=bold>\1</>", release_notes)

|

||||

|

||||

self.line(release_notes)

|

||||

|

||||

return 0

|

||||

|

||||

|

||||

class SearchCommand(Command):

|

||||

name = "search"

|

||||

description = "Search for an item"

|

||||

arguments = [

|

||||

argument(

|

||||

"query",

|

||||

"The name to search for",

|

||||

optional=False,

|

||||

multiple=False,

|

||||

)

|

||||

]

|

||||

options = [

|

||||

option(

|

||||

"source",

|

||||

"-s",

|

||||

"Qobuz, Tidal, Soundcloud, Deezer, or Deezloader",

|

||||

flag=False,

|

||||

default="qobuz",

|

||||

),

|

||||

option(

|

||||

"type",

|

||||

"-t",

|

||||

"Album, Playlist, Track, or Artist",

|

||||

flag=False,

|

||||

default="album",

|

||||

),

|

||||

]

|

||||

|

||||

help = (

|

||||

"\nSearch for <title>Rumours</title> by <title>Fleetwood Mac</title>\n"

|

||||

"$ <cmd>rip search 'rumours fleetwood mac'</cmd>\n\n"

|

||||

"Search for <title>444</title> by <title>Jay-Z</title> on TIDAL\n"

|

||||

"$ <cmd>rip search --source tidal '444'</cmd>\n\n"

|

||||

"Search for <title>Bob Dylan</title> on Deezer\n"

|

||||

"$ <cmd>rip search --type artist --source deezer 'bob dylan'</cmd>\n"

|

||||

)

|

||||

|

||||

def handle(self):

|

||||

query = self.argument("query")

|

||||

source, type = clean_options(self.option("source"), self.option("type"))

|

||||

|

||||

config = Config()

|

||||

core = RipCore(config)

|

||||

|

||||

if core.interactive_search(query, source, type):

|

||||

core.download()

|

||||

else:

|

||||

self.line("<error>No items chosen, exiting.</error>")

|

||||

|

||||

|

||||

class DiscoverCommand(Command):

|

||||

name = "discover"

|

||||

description = "Download items from the charts or a curated playlist"

|

||||

arguments = [

|

||||

argument(

|

||||

"list",

|

||||

"The list to fetch",

|

||||

optional=True,

|

||||

multiple=False,

|

||||

default="ideal-discography",

|

||||

)

|

||||

]

|

||||

options = [

|

||||

option(

|

||||

"scrape",

|

||||

description="Download all of the items in the list",

|

||||

),

|

||||

option(

|

||||

"max-items",

|

||||

"-m",

|

||||

description="The number of items to fetch",

|

||||

flag=False,

|

||||

default=50,

|

||||

),

|

||||

option(

|

||||

"source",

|

||||

"-s",

|

||||

description="The source to download from (<cmd>qobuz</cmd> or <cmd>deezer</cmd>)",

|

||||

flag=False,

|

||||

default="qobuz",

|

||||

),

|

||||

]

|

||||

help = (

|

||||

"\nBrowse the Qobuz ideal-discography list\n"

|

||||

"$ <cmd>rip discover</cmd>\n\n"

|

||||

"Browse the best-sellers list\n"

|

||||

"$ <cmd>rip discover best-sellers</cmd>\n\n"

|

||||

"Available options for Qobuz <cmd>list</cmd>:\n\n"

|

||||

" • most-streamed\n"

|

||||

" • recent-releases\n"

|

||||

" • best-sellers\n"

|

||||

" • press-awards\n"

|

||||

" • ideal-discography\n"

|

||||

" • editor-picks\n"

|

||||

" • most-featured\n"

|

||||

" • qobuzissims\n"

|

||||

" • new-releases\n"

|

||||

" • new-releases-full\n"

|

||||

" • harmonia-mundi\n"

|

||||

" • universal-classic\n"

|

||||

" • universal-jazz\n"

|

||||

" • universal-jeunesse\n"

|

||||

" • universal-chanson\n\n"

|

||||

"Browse the Deezer editorial releases list\n"

|

||||

"$ <cmd>rip discover --source deezer</cmd>\n\n"

|

||||

"Browse the Deezer charts\n"

|

||||

"$ <cmd>rip discover --source deezer charts</cmd>\n\n"

|

||||

"Available options for Deezer <cmd>list</cmd>:\n\n"

|

||||

" • releases\n"

|

||||

" • charts\n"

|

||||

" • selection\n"

|

||||

)

|

||||

|

||||

def handle(self):

|

||||

source = self.option("source")

|

||||

scrape = self.option("scrape")

|

||||

chosen_list = self.argument("list")

|

||||

max_items = self.option("max-items")

|

||||

|

||||

if source == "qobuz":

|

||||

from streamrip.constants import QOBUZ_FEATURED_KEYS

|

||||

|

||||

if chosen_list not in QOBUZ_FEATURED_KEYS:

|

||||

self.line(f'<error>Error: list "{chosen_list}" not available</error>')

|

||||

self.line(self.help)

|

||||

return 1

|

||||

elif source == "deezer":

|

||||

from streamrip.constants import DEEZER_FEATURED_KEYS

|

||||

|

||||

if chosen_list not in DEEZER_FEATURED_KEYS:

|

||||

self.line(f'<error>Error: list "{chosen_list}" not available</error>')

|

||||

self.line(self.help)

|

||||

return 1

|

||||

|

||||

else:

|

||||

self.line(

|

||||

"<error>Invalid source. Choose either <cmd>qobuz</cmd> or <cmd>deezer</cmd></error>"

|

||||

)

|

||||

return 1

|

||||

|

||||

config = Config()

|

||||

core = RipCore(config)

|

||||

|

||||

if scrape:

|

||||

core.scrape(chosen_list, max_items)

|

||||

core.download()

|

||||

return 0

|

||||

|

||||

if core.interactive_search(

|

||||

chosen_list, source, "featured", limit=int(max_items)

|

||||

):

|

||||

core.download()

|

||||

else:

|

||||

self.line("<error>No items chosen, exiting.</error>")

|

||||

|

||||

return 0

|

||||

|

||||

|

||||

class LastfmCommand(Command):

|

||||

name = "lastfm"

|

||||

description = "Search for tracks from a last.fm playlist and download them."

|

||||

|

||||

arguments = [

|

||||

argument(

|

||||

"urls",

|

||||

"Last.fm playlist urls",

|

||||

optional=False,

|

||||

multiple=True,

|

||||

)

|

||||

]

|

||||

options = [

|

||||

option(

|

||||

"source",

|

||||

"-s",

|

||||

description="The source to search for items on",

|

||||

flag=False,

|

||||

default="qobuz",

|

||||

),

|

||||

]

|

||||

help = (

|

||||

"You can use this command to download Spotify, Apple Music, and YouTube "

|

||||

"playlists.\nTo get started, create an account at "

|

||||

"<url>https://www.last.fm</url>. Once you have\nreached the home page, "

|

||||

"go to <path>Profile Icon</path> => <path>View profile</path> => "

|

||||

"<path>Playlists</path> => <path>IMPORT</path>\nand paste your url.\n\n"

|

||||

"Download the <info>young & free</info> Apple Music playlist (already imported)\n"

|

||||

"$ <cmd>rip lastfm https://www.last.fm/user/nathan3895/playlists/12089888</cmd>\n"

|

||||

)

|

||||

|

||||

def handle(self):

|

||||

source = self.option("source")

|

||||

urls = self.argument("urls")

|

||||

|

||||

config = Config()

|

||||

core = RipCore(config)

|

||||

config.session["lastfm"]["source"] = source

|

||||

core.handle_lastfm_urls(";".join(urls))

|

||||

core.download()

|

||||

|

||||

|

||||

class ConfigCommand(Command):

|

||||

name = "config"

|

||||

description = "Manage the configuration file."

|

||||

|

||||

options = [

|

||||

option(

|

||||

"open",

|

||||

"-o",

|

||||

description="Open the config file in the default application",

|

||||

flag=True,

|

||||

),

|

||||

option(

|

||||

"open-vim",

|

||||

"-O",

|

||||

description="Open the config file in (neo)vim",

|

||||

flag=True,

|

||||

),

|

||||

option(

|

||||

"directory",

|

||||

"-d",

|

||||

description="Open the directory that the config file is located in",

|

||||

flag=True,

|

||||

),

|

||||

option("path", "-p", description="Show the config file's path", flag=True),

|

||||

option("qobuz", description="Set the credentials for Qobuz", flag=True),

|

||||

option("tidal", description="Log into Tidal", flag=True),

|

||||

option("deezer", description="Set the Deezer ARL", flag=True),

|

||||

option(

|

||||

"music-app",

|

||||

description="Configure the config file for usage with the macOS Music App",

|

||||

flag=True,

|

||||

),

|

||||

option("reset", description="Reset the config file", flag=True),

|

||||

option(

|

||||

"--update",

|

||||

description="Reset the config file, keeping the credentials",

|

||||

flag=True,

|

||||

),

|

||||

]

|

||||

"""

|

||||

Manage the configuration file.

|

||||

|

||||

config

|

||||

{--o|open : Open the config file in the default application}

|

||||

{--O|open-vim : Open the config file in (neo)vim}

|

||||

{--d|directory : Open the directory that the config file is located in}

|

||||

{--p|path : Show the config file's path}

|

||||

{--qobuz : Set the credentials for Qobuz}

|

||||

{--tidal : Log into Tidal}

|

||||

{--deezer : Set the Deezer ARL}

|

||||

{--music-app : Configure the config file for usage with the macOS Music App}

|

||||

{--reset : Reset the config file}

|

||||

{--update : Reset the config file, keeping the credentials}

|

||||

"""

|

||||

|

||||

_config: Optional[Config]

|

||||

|

||||

def handle(self):

|

||||

import shutil

|

||||

|

||||

from .constants import CONFIG_DIR, CONFIG_PATH

|

||||

|

||||

self._config = Config()

|

||||

|

||||

if self.option("path"):

|

||||

self.line(f"<info>{CONFIG_PATH}</info>")

|

||||

|

||||

if self.option("open"):

|

||||

self.line(f"Opening <url>{CONFIG_PATH}</url> in default application")

|

||||

launch(CONFIG_PATH)

|

||||

|

||||

if self.option("reset"):

|

||||

self._config.reset()

|

||||

|

||||

if self.option("update"):

|

||||

self._config.update()

|

||||

|

||||

if self.option("open-vim"):

|

||||

if shutil.which("nvim") is not None:

|

||||

os.system(f"nvim '{CONFIG_PATH}'")

|

||||

else:

|

||||

os.system(f"vim '{CONFIG_PATH}'")

|

||||

|

||||

if self.option("directory"):

|

||||

self.line(f"Opening <url>{CONFIG_DIR}</url>")

|

||||

launch(CONFIG_DIR)

|

||||

|

||||

if self.option("tidal"):

|

||||

from streamrip.clients import TidalClient

|

||||

|

||||

client = TidalClient()

|

||||

client.login()

|

||||

self._config.file["tidal"].update(client.get_tokens())

|

||||

self._config.save()

|

||||

self.line("<info>Credentials saved to config.</info>")

|

||||

|

||||

if self.option("deezer"):

|

||||

from streamrip.clients import DeezerClient

|

||||

from streamrip.exceptions import AuthenticationError

|

||||

|

||||

self.line(

|

||||

"Follow the instructions at <url>https://github.com"

|

||||

"/nathom/streamrip/wiki/Finding-your-Deezer-ARL-Cookie</url>"

|

||||

)

|

||||

|

||||

given_arl = self.ask("Paste your ARL here: ").strip()

|

||||

self.line("<comment>Validating arl...</comment>")

|

||||

|

||||

try:

|

||||

DeezerClient().login(arl=given_arl)

|

||||

self._config.file["deezer"]["arl"] = given_arl

|

||||

self._config.save()

|

||||

self.line("<b>Sucessfully logged in!</b>")

|

||||

|

||||

except AuthenticationError:

|

||||

self.line("<error>Could not log in. Double check your ARL</error>")

|

||||

|

||||

if self.option("qobuz"):

|

||||

import getpass

|

||||

import hashlib

|

||||

|

||||

self._config.file["qobuz"]["email"] = self.ask("Qobuz email:")

|

||||

self._config.file["qobuz"]["password"] = hashlib.md5(

|

||||

getpass.getpass("Qobuz password (won't show on screen): ").encode()

|

||||

).hexdigest()

|

||||

self._config.save()

|

||||

|

||||

if self.option("music-app"):

|

||||

self._conf_music_app()

|

||||

|

||||

def _conf_music_app(self):

|

||||

import subprocess

|

||||

import xml.etree.ElementTree as ET

|

||||

from pathlib import Path

|

||||

from tempfile import mktemp

|

||||

|

||||

# Find the Music library folder

|

||||

temp_file = mktemp()

|

||||

music_pref_plist = Path(Path.home()) / Path(

|

||||

"Library/Preferences/com.apple.Music.plist"

|

||||

)

|

||||

# copy preferences to tempdir

|

||||

subprocess.run(["cp", music_pref_plist, temp_file])

|

||||

# convert binary to xml for parsing

|

||||

subprocess.run(["plutil", "-convert", "xml1", temp_file])

|

||||

items = iter(ET.parse(temp_file).getroot()[0])

|

||||

|

||||

for item in items:

|

||||

if item.text == "NSNavLastRootDirectory":

|

||||

break

|

||||

|

||||

library_folder = Path(next(items).text)

|

||||

os.remove(temp_file)

|

||||

|

||||

# cp ~/library/preferences/com.apple.music.plist music.plist

|

||||

# plutil -convert xml1 music.plist

|

||||

# cat music.plist | pbcopy

|

||||

|

||||

self._config.file["downloads"]["folder"] = os.path.join(

|

||||

library_folder, "Automatically Add to Music.localized"

|

||||

)

|

||||

|

||||

conversion_config = self._config.file["conversion"]

|

||||

conversion_config["enabled"] = True

|

||||

conversion_config["codec"] = "ALAC"

|

||||

conversion_config["sampling_rate"] = 48000

|

||||

conversion_config["bit_depth"] = 24

|

||||

|

||||

self._config.file["filepaths"]["folder_format"] = ""

|

||||

self._config.file["artwork"]["keep_hires_cover"] = False

|

||||

self._config.save()

|

||||

|

||||

|

||||

class ConvertCommand(Command):

|

||||

name = "convert"

|

||||

description = (

|

||||

"A standalone tool that converts audio files to other codecs en masse."

|

||||

)

|

||||

arguments = [

|

||||

argument(

|

||||

"codec",

|

||||

description="<cmd>FLAC</cmd>, <cmd>ALAC</cmd>, <cmd>OPUS</cmd>, <cmd>MP3</cmd>, or <cmd>AAC</cmd>.",

|

||||

),

|

||||

argument(

|

||||

"path",

|

||||

description="The path to the audio file or a directory that contains audio files.",

|

||||

),

|

||||

]

|

||||

options = [

|

||||

option(

|

||||

"sampling-rate",

|

||||

"-s",

|

||||

description="Downsample the tracks to this rate, in Hz.",

|

||||

default=192000,

|

||||

flag=False,

|

||||

),

|

||||

option(

|

||||

"bit-depth",

|

||||

"-b",

|

||||

description="Downsample the tracks to this bit depth.",

|

||||

default=24,

|

||||

flag=False,

|

||||

),

|

||||

option(

|

||||

"keep-source", "-k", description="Keep the original file after conversion."

|

||||

),

|

||||

]

|

||||

|

||||

help = (

|

||||

"\nConvert all of the audio files in <path>/my/music</path> to MP3s\n"

|

||||

"$ <cmd>rip convert MP3 /my/music</cmd>\n\n"

|

||||

"Downsample the audio to 48kHz after converting them to ALAC\n"

|

||||

"$ <cmd>rip convert --sampling-rate 48000 ALAC /my/music\n"

|

||||

)

|

||||

|

||||

def handle(self):

|

||||

from streamrip import converter

|

||||

|

||||

CODEC_MAP = {

|

||||

"FLAC": converter.FLAC,

|

||||

"ALAC": converter.ALAC,

|

||||

"OPUS": converter.OPUS,

|

||||

"MP3": converter.LAME,

|

||||

"AAC": converter.AAC,

|

||||

}

|

||||

|

||||

codec = self.argument("codec")

|

||||

path = self.argument("path")

|

||||

|

||||

ConverterCls = CODEC_MAP.get(codec.upper())

|

||||

if ConverterCls is None:

|

||||

self.line(

|

||||

f'<error>Invalid codec "{codec}". See </error><cmd>rip convert'

|

||||

" -h</cmd>."

|

||||

)

|

||||

return 1

|

||||

|

||||

sampling_rate, bit_depth, keep_source = clean_options(

|

||||

self.option("sampling-rate"),

|

||||

self.option("bit-depth"),

|

||||

self.option("keep-source"),

|

||||

)

|

||||

|

||||

converter_args = {

|

||||

"sampling_rate": sampling_rate,

|

||||

"bit_depth": bit_depth,

|

||||

"remove_source": not keep_source,

|

||||

}

|

||||

|

||||

if os.path.isdir(path):

|

||||

import itertools

|

||||

from pathlib import Path

|

||||

|

||||

from tqdm import tqdm

|

||||

|

||||

dirname = path

|

||||

audio_extensions = ("flac", "m4a", "aac", "opus", "mp3", "ogg")

|

||||

path_obj = Path(dirname)

|

||||

audio_files = (

|

||||

path.as_posix()

|

||||

for path in itertools.chain.from_iterable(

|

||||

(path_obj.rglob(f"*.{ext}") for ext in audio_extensions)

|

||||

)

|

||||

)

|

||||

|

||||

with concurrent.futures.ThreadPoolExecutor() as executor:

|

||||

futures = []

|

||||

for file in audio_files:

|

||||

futures.append(

|